Introduction

Online video ads have become commonplace. We are used to receiving tailored ads without wondering how these ads were selected and delivered. This paper considers some technology aspects of the real-time bidding (RTB) process. It may serve as a waymark for those who have just started to research similar technologies, and it may also be useful for those who have already implemented similar solutions.

Various studies estimate that global revenue in the video advertising segment amounted to $27,799 million in 2018 and expect it to grow at an annual rate of 14.6% over the next four years [1]. The average revenue per internet user currently amounts to $9.34. This is not surprising. Just consider two facts: (a) about three quarters of internet traffic is video-based, and this share is expected to grow to 80% in 2019 [2], and (b) more than half of marketing professionals name video as the type of content with the best ROI. Indeed, the four-and-a-half-year-old forecast made by The Guardian’s experts has proved to be correct [3].

Real-time bidding (RTB) stands for “a means by which advertising inventory is bought and sold on a per-impression basis, via programmatic instantaneous auction”. Although this technology is already widespread and the rules are seemingly set, it is still evolving. Let's note some fundamental features of RTB that add value to online video advertising.

a) an online ad impression is sold as a commodity in real-time auctions, where multiple buyers may bid for it (simultaneously or in a certain order);

b) contextual targeting [4]: ad content is delivered to the end user’s device when the user is considered part of the advertiser's target audience based on his/her characteristics (e.g., geographic location, site or app category, indirectly estimated income);

c) interactivity of the ad delivery process (i.e., ad impression events are tracked, which provides evidence and detailed information for analyzing end-user behavior, such as “skipping”).

These points distinguish RTB from Programmatic Advertising and provide both sellers and buyers with additional opportunities to increase their income. For instance, interactivity provides a mechanism to track “skipping”, which is significant for analyzing the end user's attitude to the brand, particularly on YouTube, where the skip ratio amounts to half of all impressions [5].

To participate in RTB either as a seller (supply side) or a buyer (demand side), you should use one of the existing “platforms” (Ad Exchanges, Supply Side Platforms, Demand Side Platforms) [6] or introduce a new one that supports certain standards for data transactions. The following IT giants are among the key players in this market: AOL, Google, Microsoft, and Facebook. As RTB is attractive both for companies already involved in the advertising industry and for newcomers, the number of platforms has been increasing dramatically.

Let's make a note on standards. The Interactive Advertising Bureau (IAB) brings together over 650 leading media and technology companies to collaborate on common rules for programmatic advertising; it develops and shares technical standards and best practices. Standards allow various components (services running on servers, as well as software (players) on end-user devices) to communicate with each other reliably while exchanging requests. We may consider the RTB process as data processing and transfer (an exchange of requests) among numerous servers (or even clusters), implemented by means of certain hardware and software solutions. At the level of an individual server, a huge number of incoming requests (from supply side partners) must be processed. For each of them, derived requests are formed and sent further (to demand side partners), their responses are received and processed, and finally the server forms its own response to the initial incoming request [7]. Moreover, each derived request may lead to a cluster of new derived requests on partners' servers.

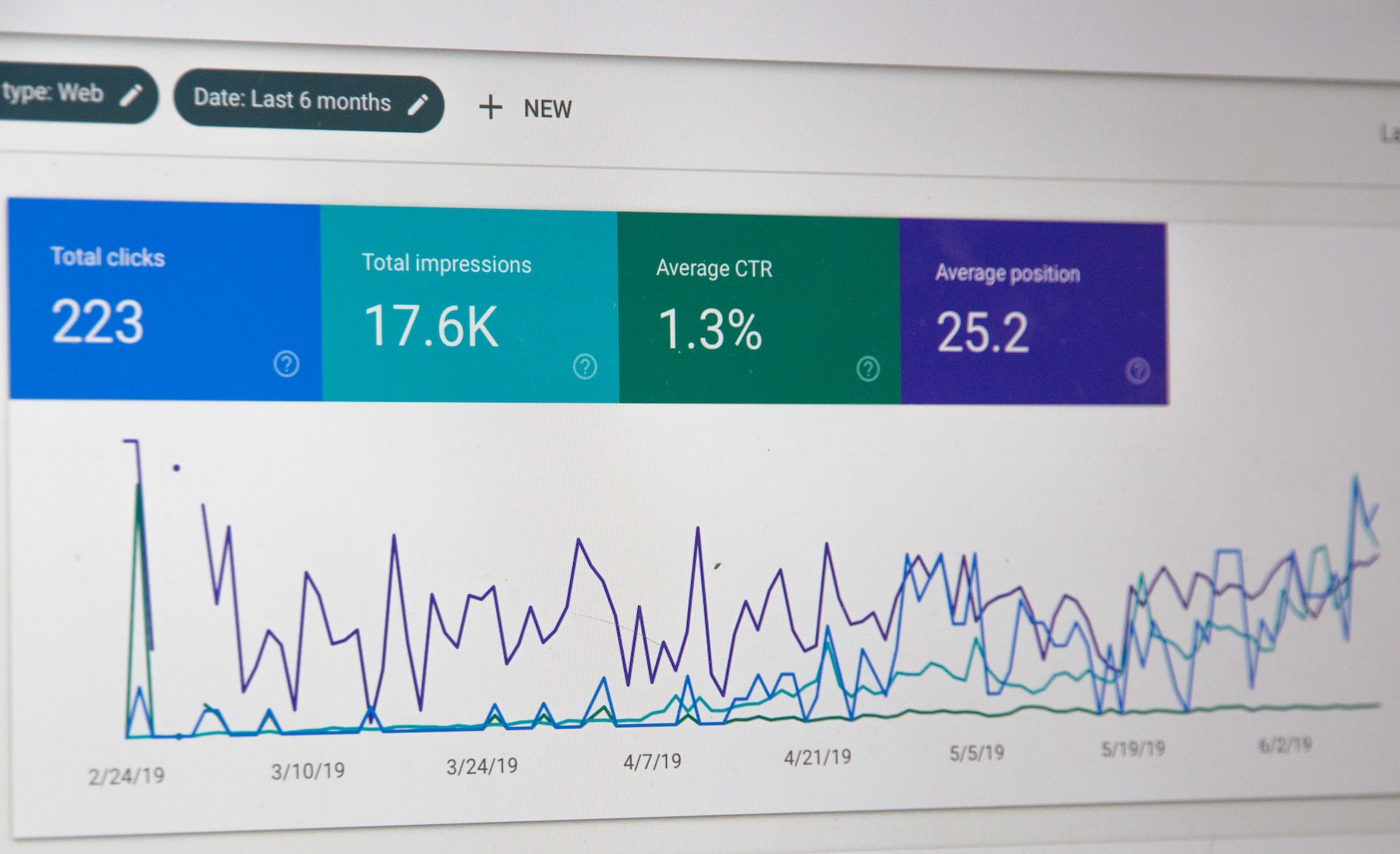

RTB information exchange processes (picture to be drawn)

Now that the basic idea of online video ads and RTB has been given in the introductory section above, we may move on to describe the RTB solution. First, an overview of the system architecture is given to show the larger picture. Then every major component is discussed to give some details of the technologies used.

Architecture overview

The general principles of high load system design were kept in mind during the architecture design stage. The system should be implemented with the following features:

a) high scalability (able to cope with traffic, connection number, or database size growth by performing horizontal and vertical scaling upgrades),

b) decomposition into services,

c) high availability and design for fault tolerance (allowing redundancy and monitoring to continue operation even if a part of the system fails),

d) technology independence (each component can be implemented with different technologies, programming languages, etc.), and, finally,

e) openness (all external interconnections are implemented under standards for RTB platforms).

Hundreds of thousands of hours may be spent designing and implementing such a system. Therefore, it's preferable to use available and well-tested open-source solutions. We consider the Hadoop stack [8] the technological base for our solution. Furthermore, we've used Hortonworks DataFlow (HDF) [9] together with Stream Analytics Manager (SAM) [10] to set up and manage the needed Hadoop components.

Component Groups

All components can be split into 3 groups: (a) Analytics, (b) RTB Services, and (c) Administration.

The RTB Services group receives requests from supply partners, processes those requests, forms derived requests to demand partners, collects their responses, and streams data to the Analytics group. In turn, the Analytics group collects, stores, and processes the streamed data and provides aggregated data at the Administration group's request. The latter group contains tools and UIs for monitoring and administration.

Architecture overview (components and data flow)

As of now, VAST/VPAID and OpenRTB are the most commonly used standards for real-time bidding on video ads. Therefore, ad platforms communicate using HTTP/HTTPS requests, and the responses to POST requests contain XML or JSON payloads according to the chosen standard. As a rule, the content of these responses is compressed (preferably with gzip content encoding), which decreases network traffic but increases the computational load on the servers.
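The trade-off between traffic and CPU load mentioned above can be illustrated with a minimal sketch. It assumes a JSON payload and uses only the Python standard library; the helper names `encode_body` and `decode_body` and the sample payload are hypothetical and are not part of the described system.

```python
import gzip
import json


def encode_body(payload):
    """Serialize a payload to JSON and compress it with gzip.

    Returns the compressed body and the HTTP headers that describe it.
    """
    raw = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    body = gzip.compress(raw)  # costs CPU time, saves network traffic
    headers = {
        "Content-Type": "application/json",
        "Content-Encoding": "gzip",
    }
    return body, headers


def decode_body(body, headers):
    """Reverse encode_body on the receiving side."""
    if headers.get("Content-Encoding") == "gzip":
        body = gzip.decompress(body)
    return json.loads(body.decode("utf-8"))


# Illustrative payload only; real payloads follow the chosen standard.
sample = {"id": "req-1", "imp": [{"id": "1", "video": {"mimes": ["video/mp4"]}}]}
```

Very small payloads may not shrink at all under gzip, so the benefit is mostly seen on larger bodies.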

Components overview

Let's consider every component of the scheme.

A. Analytic Services

A.1. Stream Analytics Manager

Stream Analytics Manager (a part of Hortonworks DataFlow) provides a UI to create applications that connect components of the Analytics group. Creating a new application in SAM is fairly simple: all you need to do is pick the needed components from the Hadoop stack and set up their parameters. Some of these components are designated as data sources, others as data sinks.

A.2. Distributed Streaming Platform

The Distributed Streaming Platform is used to receive and temporarily store streaming data. Within the Hadoop technology stack, this role is played by Kafka. It can receive data simultaneously on several partitions (parallel streaming) and also provides replication (redundancy).
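The ideas of partitioning and replication can be modelled with a short conceptual sketch. It is not Kafka code: Kafka's own default partitioner uses a different hash function and its replica placement is more elaborate. The functions `pick_partition` and `replica_brokers` and the broker names are assumptions made for illustration.

```python
import zlib


def pick_partition(key, num_partitions):
    """Map a record key (bytes) to a partition.

    Records with the same key always land on the same partition,
    while different keys spread over all partitions (parallel streaming).
    CRC32 is used here only as a simple, deterministic stand-in hash.
    """
    return zlib.crc32(key) % num_partitions


def replica_brokers(partition, brokers, replication_factor):
    """Choose which brokers hold copies of a partition (redundancy).

    A simple ring placement: the partition index selects the first broker,
    and the following brokers in the list hold the extra copies.
    """
    n = len(brokers)
    return [brokers[(partition + i) % n] for i in range(replication_factor)]
```

With three brokers and a replication factor of 2, every partition survives the loss of any single broker in this model.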

A.3. Distributed Data Store

Druid [11] is a column-oriented open-source distributed data store. It is designed to quickly absorb massive quantities of event data and provide low-latency queries on top of the data.

Druid runs as a cluster of specialized processes (called nodes) to support a fault-tolerant architecture where data is stored redundantly. The cluster includes external dependencies for coordination (Apache ZooKeeper), metadata storage (DBMS, e.g. MySQL, PostgreSQL, or Derby), and a deep storage facility (e.g. HDFS or Amazon S3) for permanent data backup.
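To give an idea of what a low-latency query over event data may look like, the sketch below builds a Druid native timeseries query as a plain dictionary that can be serialized to JSON. The data source name `rtb_events` and the metric name `impressions` are hypothetical; the actual schema of the described system is not given here.

```python
import json


def hourly_impressions_query(datasource, start_iso, end_iso):
    """Build a Druid native 'timeseries' query that sums a metric per hour.

    The interval is an ISO-8601 'start/end' string. The metric name
    'impressions' is an assumed column, used only for illustration.
    """
    return {
        "queryType": "timeseries",
        "dataSource": datasource,
        "granularity": "hour",
        "intervals": [f"{start_iso}/{end_iso}"],
        "aggregations": [
            {"type": "longSum", "name": "impressions", "fieldName": "impressions"},
        ],
    }


query = hourly_impressions_query(
    "rtb_events", "2018-01-01T00:00:00Z", "2018-01-02T00:00:00Z"
)
query_json = json.dumps(query)  # body to be sent to the Druid cluster over HTTP
```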

B. RTB Services

The RTB Services group contains several types of services, running within a single or several clusters. Each cluster may be implemented either as a single high performance physical server or as a set of physical servers located nearby (within the same LAN).

We designate several subgroups within the RTB Services group, such as

(1) load balancer,

(2) bidder services,

(3) manager service,

(4) event trackers, and

(5) loggers.

The services were designed so that they can be distributed among different machines (servers), while each cluster should contain all types of services.

Before considering these subgroups in detail, we can define several types of events in our architecture:

(a) a continuous flow of (incoming) requests to the bidder services (handled by the load balancer and subsequently by the bidder services),

(b) derived requests to and responses from partner servers as a result of incoming requests (generated and handled by the bidder services),

(c) episodic events like configuration changes, triggered manually by the administrator or by some automatic rule handler (handled by the manager service), and

(d) tracking events (impression tracking, etc.).

B.1. The load balancer

The load balancer is the front component of the architecture. It receives requests from the “outside world” clients and distributes the load among several bidder services (running on a single or multiple physical servers). Thus, the load balancer optimizes the load, which increases availability and decreases the mean response time. Redundancy of the bidder services (several components that duplicate the same functions) increases the reliability of the system: redundancy within one physical server increases the availability factor, while redundancy at the level of physical servers increases the system's fault tolerance against hardware failures.

We suggest using Nginx as the load balancer due to its proven high performance and reliability [12]. Its configuration is flexible enough to cope with a variety of cluster configurations (the number of physical servers and the distribution of the services among them).
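The balancing and redundancy logic described above can be sketched in a few lines. This is not Nginx configuration; it is a simplified model of round-robin distribution (the default balancing method for an Nginx upstream group) with failed backends skipped. The class `RoundRobinBalancer` and the backend names are hypothetical.

```python
import itertools


class RoundRobinBalancer:
    """Distribute requests over redundant bidder services in turn."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._cycle = itertools.cycle(self.backends)

    def mark_down(self, backend):
        """Exclude a failed bidder service from rotation."""
        self.healthy.discard(backend)

    def mark_up(self, backend):
        """Return a recovered bidder service to rotation."""
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self):
        """Return the next healthy backend, skipping failed ones."""
        for _ in range(len(self.backends)):
            candidate = next(self._cycle)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy bidder service available")
```

As long as at least one duplicate of the bidder service is healthy, requests keep being served, which is the availability gain that redundancy is meant to provide.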

B.2. The bidder services

The bidders receive OpenRTB and VAST/VPAID requests from the “outside” clients through the load balancer. Each bidder service runs and listens on a designated port. The API is implemented in such a way that the bidder service receives the HTTP/S requests transmitted from the load balancer and processes them. It then sends a number of outgoing derived requests to the relevant clients in order to receive their responses and form its own response to the initial incoming request. The bidder services also collect transaction data in a temporary buffer and periodically stream it to the logger service.

The aim of the bidder service is to form an OpenRTB or VAST/VPAID (depending on the chosen standard) response with suitable ad content and send it back. The key word is “suitable”. It means that we analyze the information obtained with the initial request and select those ad demand partners that could be interested in the opportunity.
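The selection of “suitable” demand partners can be pictured as a filter over partner targeting rules. The sketch below is only one possible reading: the field names (`countries`, `categories`, `max_bid`, `floor_price`) are assumptions chosen for illustration, and an empty set is taken to mean "no restriction".

```python
def select_partners(request, partners):
    """Return names of demand partners that may be interested in a request.

    request:  dict with 'country', 'category', and 'floor_price' (assumed fields)
    partners: list of dicts with 'name', 'countries', 'categories', 'max_bid'
    """
    selected = []
    for partner in partners:
        # An empty targeting set means the partner accepts any value.
        if partner["countries"] and request["country"] not in partner["countries"]:
            continue
        if partner["categories"] and request["category"] not in partner["categories"]:
            continue
        # No point in asking a partner that cannot reach the floor price.
        if request["floor_price"] > partner["max_bid"]:
            continue
        selected.append(partner["name"])
    return selected
```

Filtering before sending derived requests keeps the number of outgoing connections per incoming request small.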

Designing and implementing bidder services that meet high QPS requirements under heavy load was a technological challenge. We should point out two related problems here:

(a) a huge number of open connections should be handled simultaneously,

(b) each request should be processed within some reasonable time interval (the mean time for request processing), but this time depends on the derived requests.

The first point is the well-known C10k problem [13], and much useful advice may be found in the literature [14]. The second one is partially solved by a combination of more efficient software and more powerful hardware, but it still depends significantly on the mean response time for the derived requests.
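One common way to keep the processing time bounded despite slow partners is to send the derived requests concurrently and stop waiting at a deadline. The sketch below shows this idea with Python's `asyncio`; it is an assumption about how the problem can be approached, not a description of the implemented bidder. `fake_partner` simulates a partner call with a delay instead of performing real network I/O.

```python
import asyncio


async def fake_partner(name, delay_s, price):
    """Stand-in for a derived request to a demand partner (no real network)."""
    await asyncio.sleep(delay_s)
    return {"partner": name, "price": price}


async def collect_bids(calls, deadline_s):
    """Run all derived requests concurrently and keep answers that arrive in time."""
    tasks = [asyncio.ensure_future(call) for call in calls]
    done, pending = await asyncio.wait(tasks, timeout=deadline_s)
    for task in pending:
        task.cancel()  # partners that are too slow are ignored
    await asyncio.gather(*pending, return_exceptions=True)
    return [task.result() for task in done if task.exception() is None]


def demo():
    """Ask one fast and one slow partner with a 100 ms deadline."""
    calls = [fake_partner("fast", 0.01, 1.5), fake_partner("slow", 0.5, 9.0)]
    return asyncio.run(collect_bids(calls, deadline_s=0.1))
```

With a deadline, the response time for the initial request is bounded by the deadline rather than by the slowest partner, at the cost of losing late bids.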

In order to communicate with the partners’ servers, the bidder services must know their connection parameters (host, API path, protocol, etc.). While running, the bidder services keep the demand and supply parameters in RAM, which is much faster than reading them from a file-based DBMS on every request.
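Keeping partner parameters in RAM can be sketched as a small in-memory registry that is rebuilt from a configuration text and swapped in as a whole. The class `PartnerRegistry` and the JSON layout (a list of objects with `name`, `host`, `path`, `protocol`) are hypothetical and serve only as an illustration.

```python
import json
import threading


class PartnerRegistry:
    """In-memory lookup table of partner connection parameters."""

    def __init__(self):
        self._lock = threading.Lock()
        self._partners = {}

    def reload(self, config_text):
        """Parse a JSON list of partners and replace the table in one step."""
        fresh = {p["name"]: p for p in json.loads(config_text)}
        with self._lock:
            # Readers see either the old table or the new one, never a mix.
            self._partners = fresh
        return len(fresh)

    def get(self, name):
        """Return the parameters of one partner, or None if unknown."""
        return self._partners.get(name)
```

Because lookups are plain dictionary reads, no disk or database access is needed while a request is being processed.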

B.3. The manager service

Demand and supply change regularly, so the bidder services must use up-to-date data in their operations. Therefore, our architecture contains a designated manager service, which aims to:

(a) start, stop, and restart the bidder services (therefore, the manager service may change the number of bidder services),

(b) watch for configuration changes on the Registry Service and update the local configuration files,

(c) force the bidder services to reload the configuration,

(d) test the bidder services.
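Tasks (b) and (c) above can be sketched as a change detector: the manager compares a digest of the latest configuration with the previous one and triggers a reload only when they differ. The class `ConfigWatcher` and the callback are assumptions; how the configuration is actually fetched from the Registry Service is not shown.

```python
import hashlib


class ConfigWatcher:
    """Detect configuration changes and notify the bidder services."""

    def __init__(self, on_change):
        self._digest = None
        self._on_change = on_change  # e.g. a function that forces a reload

    def check(self, config_bytes):
        """Call on_change only if the configuration differs from the last one seen.

        Returns True when a change was detected.
        """
        digest = hashlib.sha256(config_bytes).hexdigest()
        if digest == self._digest:
            return False
        self._digest = digest
        self._on_change(config_bytes)
        return True
```

Comparing digests avoids forcing the bidder services to reload when a periodic check finds nothing new.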

B.4. The event trackers

The event trackers receive requests from the end-user device, which signal that an impression has taken place. Although we expect to receive requests from real end-users’ devices, these requests must be checked for validity in order to filter out a possible flood of fraudulent events.
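The validity checks are not specified here, so the sketch below shows just two plausible ones as an assumption: an impression identifier must have been issued earlier and may be confirmed only once, and a single IP address may send only a limited number of events. The class `ImpressionValidator` and its result strings are hypothetical.

```python
class ImpressionValidator:
    """Reject duplicate, unknown, or suspiciously frequent tracking events."""

    def __init__(self, issued_ids, max_per_ip):
        self.pending = set(issued_ids)  # impressions that were actually served
        self.max_per_ip = max_per_ip
        self.per_ip = {}

    def validate(self, impression_id, ip):
        """Return 'ok', 'rate_limited', or 'unknown_or_duplicate'."""
        count = self.per_ip.get(ip, 0) + 1
        self.per_ip[ip] = count
        if count > self.max_per_ip:
            return "rate_limited"
        if impression_id not in self.pending:
            return "unknown_or_duplicate"
        self.pending.remove(impression_id)  # each impression counts once
        return "ok"
```

A production tracker would also need time windows and expiry for the counters; they are left out to keep the sketch short.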

B.5. The logger services

The logger services receive packages of log messages from the bidder services and event trackers, convert those messages into the Avro format, and stream them to Kafka. Avro's compact binary encoding was chosen to decrease network traffic, while the schema validation mechanism is based on a single Schema Registry service [15].
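The packaging of log messages before they are handed over can be sketched as a buffer that flushes either when it is full or when its oldest record is too old. Avro encoding and the Kafka producer are deliberately left out: `sink` is a hypothetical callable that stands in for them, and `LogBuffer` with its thresholds is an assumption made for illustration.

```python
import time


class LogBuffer:
    """Collect records and pass them on in batches."""

    def __init__(self, sink, max_items=100, max_age_s=1.0, clock=time.monotonic):
        self._sink = sink            # receives a list of records per batch
        self._max_items = max_items
        self._max_age_s = max_age_s
        self._clock = clock          # injectable for testing
        self._items = []
        self._oldest = None

    def add(self, record):
        """Store a record and flush if the batch is full or too old."""
        if not self._items:
            self._oldest = self._clock()
        self._items.append(record)
        too_many = len(self._items) >= self._max_items
        too_old = self._clock() - self._oldest >= self._max_age_s
        if too_many or too_old:
            self.flush()

    def flush(self):
        """Send the current batch to the sink, if there is one."""
        if self._items:
            batch, self._items = self._items, []
            self._sink(batch)
```

Batching trades a small delay in log delivery for far fewer network round trips.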

C. Administration

Admin UI

We have created the Admin UI to manage the parameters of the RTB services, to store and edit data about partners' servers (request parameters), and to visualize the analytical data. This component is far less heavily loaded than the RTB services or the Analytics group components. Therefore, we regarded user-friendliness and security as the primary criteria when choosing the technology to implement the Admin UI, and the Laravel [16] framework was seen as the most suitable option.

Services monitoring

Monitoring tools are required for the services on both Data-Lake and Bidder-Lake. To monitor the components of Data-Lake, we have used Ambari [17]. It simplifies the management and monitoring of services.

To monitor Bidder-Lake, we have used Zabbix [18]. Its client was installed on each monitored server, and a Zabbix server was installed separately within Data-Lake to provide a UI for the monitoring data. Moreover, Zabbix was set up to send messages to our Telegram group whenever a service malfunctions.

In addition, during the early development stages, we performed a series of high-load simulations on our services using Apache benchmarking tools (ab and JMeter) [19], [20] and collected the monitoring data.

Conclusion

This paper has considered some technology aspects of the real-time bidding (RTB) process. We hope it will serve as a waymark for those who have just started to research similar technologies and will also be useful for those who have already implemented similar solutions.

Finally, we'd like to share several findings accumulated from our experience.

• Firstly, although many RTB solutions are already available and the platforms use different technologies, all transactions among servers are carried out under common standards. The system should behave as recommended by the IAB standards for OpenRTB and VAST/VPAID.

• Secondly, it's preferable to use proven open-source solutions. This will give consistency to your solution.

• Thirdly, the principles of high load system design should be followed in every component of your solution.

• Lastly, in our experience, running the servers on virtual machines dramatically decreases system performance.

Mykhailo Makhno, Dmytro Biriukov